Disclaimer the first: The following post reflects my position and my position only. It does not involve the office of Learning Technologies in any way.

Disclaimer the second: When discussing AI, I am mostly talking about LLMs, not all forms of automation.

Disclaimer the third: There’s no such thing as AI. As Emily Bender and Alex Hanna describe in their book, The AI Con (COD Library link), a book y’all should read, AI is not a scientific term. It’s not an engineering term. It’s a marketing term.

For Starters…

Back in June, I went to The Teaching Professor Conference. I’ve been going for years. I have presented there as well. I’ve always considered it the most useful conference. It’s specifically for higher ed teachers whereas disciplinary conferences tend to be more for recruitment and research.

So, I went and was appalled by the state of things. In previous years, you’d have almost a whole hotel ballroom for publishers, showcasing the latest in the scholarship of teaching and learning. You could find a lot on the latest innovations in higher ed teaching, new tech tools, new advances in the science of learning. I always came home with a bunch of books, and a real sense of excitement about the craft of teaching.

But not this year. This year, AI had sucked the life out of the whole thing. There were no publishers present, no new research, no new cool tool showcased. Only AI, and a few other sessions on DEI-related stuff.

And even more depressing was the total embrace of LLMs by the presenters and attendees. Not a shred of critical thinking was to be found.

I left bummed out and dejected.

The Big Picture on What’s Wrong with LLMs

There are aspects of the “wrongness” of LLMs that are not up for debate.

First, as I have previously discussed and as is now well known, LLMs, and the data centers they require, are devastating for the environment. The amount of water and energy required to train, deploy, and use the models is enormous and unsustainable.

Second, LLMs are based on intellectual property theft. The major LLM companies do not even deny this. Their leaders have explicitly stated that if they had to abide by copyright laws, their business model would collapse. LLMs require hoovering up more and more data, which includes copyrighted content for which the creators have not provided consent, and for which they receive neither credit nor compensation. There are pending lawsuits on this.

Third, LLMs (or any technology, for that matter) are not neutral. The leaders, founders, and funders (including but not limited to Peter Thiel, Elon Musk, Sam Altman, Mark Zuckerberg, and resident “philosopher” Curtis Yarvin) tend to subscribe to ideologies that I would qualify as dangerous and anti-human. To unpack this, y’all need to familiarize yourselves with TESCREAL. I have read it described as data eugenics in other places. Again, the technologies they fund and develop reflect these ideologies. Zuckerberg envisions a future where most of your friends will be AI. You might also want to read Careless People (COD Library link) as well as Karen Hao’s Empire of AI (author’s website, doesn’t look like our library has it). If one wanted to be flippant, one could argue that the pronouncements from these funders and leaders are very reminiscent of cargo cult-type beliefs.

Fourth, the entire chain of training, testing, and deployment of LLMs involves massive exploitation at every point. We’ve already seen that there is exploitation of natural resources as well as intellectual property. However, we also need to take into consideration what is called Reinforcement Learning from Human Feedback (RLHF). This is a technique in which humans rate model output, in part so that the model learns not to return offensive or illegal content. LLMs need humans to make those determinations. This is similar to all the moderation done on social media platforms to determine whether or not to allow content to be published. To do that work, the major AI companies subcontract to companies in the Global South, especially in countries suffering from massive poverty, so workers are paid a few cents per task to review horrific or depraved content. These workers are called ghost workers. They are the truly invisible hands in the Silicon Valley machines. There is an excellent book published on this, appropriately titled Ghost Work (COD Library link). Kanopy also has a film on the same topic, Ghost Workers (Kanopy link).

It is this exploitation of the environment, intellectual property, and labor that has led multiple researchers to describe AI companies as 21st century colonialism.

It is my personal opinion that, for the reasons listed above, it is not possible to use LLMs ethically. Even the scenario of having students use LLMs and then critically examine their output still involves the forms of exploitation noted above.

Going through the considerations above actually reminded me of an old Richard Matheson short story, Button, Button (yes, I’m a sci-fi nerd). It’s really short (9 pages) and you can find a pdf here. In the story, a strange man offers a couple a push-button unit tied to a wooden box. If the couple pushes the button, they get a nice chunk of money, but someone they don’t know will die. You can read the story to see how that turns out. Anyhoo, think of it this way: every time you press the button, a community in Chile (or some other country where LLM companies subcontract work and data centers) loses access to water.

None of what I have just described is new or unknown. There is already ample literature on this. But what often happens is that, in any presentation on AI, there will be a few slides about the issues with LLMs. Then, that lip service having been paid, the presentation will move on to using AI, and that will be it. And this is the part of the presentation that no one will remember or discuss.

“But it’s convenient.” The convenience is not free. There are externalities that we neither see nor perceive. The time you saved is resources spent by someone or something else: a ghost worker, a data labeler, the environment.

To me, that is enough: I cannot, in good conscience, use LLMs, recommend them, or in any way encourage their use.

But that’s not all. There’s everything pertaining to LLM impacts on users.

Cognitive Offloading

Cognitive offloading refers to using an LLM to do your thinking for you. You have a task to complete: draft that prompt and, after a few iterations, you’re done. Time saved!

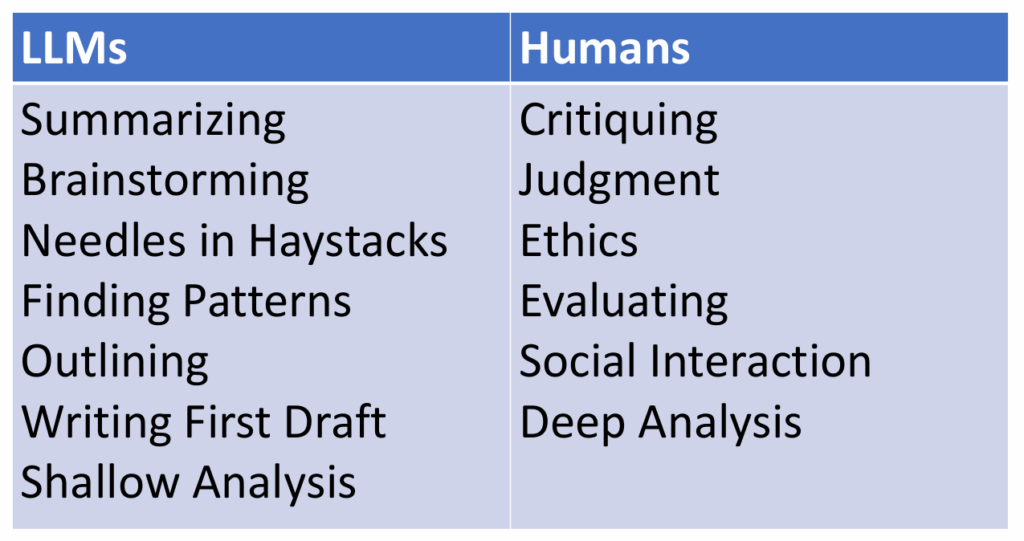

This is the reasoning underlying this slide from one of the presentations at the Teaching Professor conference:

Under this scenario, the LLM does the basic stuff, and you get to save your precious brain cells for the more sophisticated stuff.

Except it does not work that way. I would argue that this scenario only works if you already know how to do the stuff on the left. But I would also add that there is no way to do the stuff on the right if you haven’t learned and gotten used to doing the stuff on the left. We all know there are no shortcuts when it comes to learning. Offloading one’s cognitive effort to a machine is not a learned skill. A skill cannot be taken away. A tool can. What then?

But Wait, There’s More…

Studies and reports are piling up on the detrimental effects of LLMs on users, as are cases of research articles citing non-existent sources and legal briefs citing non-existent cases or precedents. I’ll provide just a few links:

There is the academic cheating, of course. This happens so much that LLM usage decreases in the summer when students are not in school. I don’t really need to expand on that.

We also already know the impact of smart phones and social media on attention spans, but the omnipresence of AI summaries also has detrimental impacts: AI summaries cause ‘devastating’ drop in audiences, online news media told. Along with the fact that Americans no longer really read, that’s really sad.

However, enjoy your future conversations with students regarding your deviations from Grammarly’s predicted grades.

There are more stories similar to this where people anthropomorphize the LLM that feeds their egos and fantasies, for instance, this (see the many links in the article).

More generally, I really recommend this Charlie Warzel piece, AI Is a Mass-Delusion Event (the COD library has an institutional subscription to the Atlantic), as well as Ian Bogost’s piece, College Students Have Already Changed Forever (also in the Atlantic).

But Employers Want Students with AI Skills

I would argue that crafting prompts is not a skill (no, there’s no such thing as prompt engineering). Using LLMs is not a skill. In the context of learning, it’s a shortcut to having to struggle to develop a skill. Again, a skill cannot be taken away. A tool can. If the LLM providers decide to make their tools unaffordable (as they will), or, say, the bubble bursts, will our students be better off having gotten dependent on LLMs or having developed the actual skills needed? Skilled workers are expensive, though, and that is what’s at stake here. And the gains from using AI may fail to materialize: AI Is Failing at an Overwhelming Majority of Companies Using It, MIT Study Finds.

Final Thoughts

There is a lot more that I could add (such as the quality of LLM output) but this is already far too long.

Let me close with just a few thoughts.

I find removing humans (with all their imperfections) from interactions disturbing. It’s dehumanizing and a contributor to what Cory Doctorow calls enshittification (book coming out in October, Wikipedia article here). However, this is well in line with the ideologies of the founders and funders mentioned above.

At COD, we have always made it a point of pride to say that we’re the place where students get taught not by an overworked and underpaid TA, but by a fully credentialed professor who wants to teach undergraduates, with a reasonable class size.

I wish we would make the decision to be the college where every part of one’s educational experience involves humans in control.

And if we need help with anything, well, ask your colleagues, your union reps, our LT colleagues, and all the other awesome people we are fortunate to work with, with all their various skills. Let’s turn to other humans.

So these are my reasons to stay away from LLMs. Fortunately for you guys, I have one more academic year in this position. Then, the administration can decide to appoint someone more sanguine about the whole thing.

Thank you for your attention to this matter.